Over the last couple of months we have seen customers increasingly investigating strategies to answer this question: "How do we enable alignment across 'level 3' operational applications?"

Aligning level 3 applications without a rip-and-replace approach will become one of the core requirements for making Manufacturing Operations Management sustainable and effective.

To keep systems in sync, people turn to data warehouses or manual binding, but neither approach is practical in a sustainable, ever-changing world. There are usually upwards of 20 systems, which come from different vendors, and even when they come from the same vendor they are implemented by different cultures in different plants. Even the thinking around something as simple as asset naming differs between these groups.

Again borrowing from Gerhard Greeff, Divisional Manager at Bytes Systems Integration, as he put it in his paper "When last did you revisit your MOM?":

MDM, or Master Data Management, is the tool used to relate data between different applications.

So what is master data and why should we care? According to Wikipedia, "Master Data Management (MDM) comprises a set of processes and tools that consistently defines and manages the non-transactional data entities of an organization (which may include reference data). MDM has the objective of providing processes for collecting, aggregating, matching, consolidating, assuring quality, persisting and distributing such data throughout an organization to ensure consistency and control in the ongoing maintenance and application use of this information."

Processes commonly seen in MDM solutions include source identification, data collection, data transformation, normalization, rule administration, error detection and correction, data consolidation, data storage, data distribution, and data governance.

Why is it necessary to differentiate between enterprise MDM and manufacturing MDM (mMDM)? According to MESA, in the vast majority of cases, the engineering bill of materials (BOM), the routing, or the general recipe from your ERP or formulation/PLM systems simply lacks the level of detail necessary to:

1. Run detailed routing through shared shop resources
2. Set up the processing logic your batch systems execute
3. Scale batch sizes to match local equipment assets
4. Set up detailed machine settings

This problem is compounded by heterogeneous legacy systems, mistrust of or disbelief in controlled MOM systems, data ownership issues, and data inconsistency. The absence of a strong, common data architecture promotes ungoverned proliferation of data definitions, point-to-point integration and cost-ineffective data management strategies. Within the manufacturing environment, all this translates into many types of waste and added cost.

The master data required to execute production processes is highly dependent upon individual assets and site-specific considerations, all of which are subject to change at a much higher frequency than typical enterprise processes like order entry or payables processing. As a result, manufacturing master data will be a blend of data that is not related specifically to site-level details (such as a customer ID or high-level product specifications shared between enterprise order-entry systems and the plant) and site-specific or "local" details such as equipment operating characteristics (which may vary by local humidity, temperature, and drive speed) or even local raw material characteristics.

This natural division between enterprise master data and "local" or manufacturing master data suggests specific architectural approaches to manufacturing master data management (mMDM) which borrow heavily from enterprise MDM models, but which are tuned to the specific requirements of the manufacturing environment.
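To make the enterprise/local split concrete, here is a minimal sketch, assuming a single product whose general recipe is held at enterprise level while each site keeps its own vessel data. The class names, fields, IDs and the `fill_fraction` parameter are hypothetical illustrations, not part of any MESA model; the point is only that the same enterprise record yields different executable batches once local master data is applied (item 3 in the list above).

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class EnterpriseMaterial:
    """Enterprise master data: identical for ERP order entry and every plant (hypothetical model)."""
    material_id: str
    description: str
    # General recipe as kg of each ingredient per kg of product (site-independent).
    recipe_kg_per_kg: dict[str, float] = field(default_factory=dict)


@dataclass(frozen=True)
class SiteProfile:
    """Local (site-specific) master data that changes with equipment and conditions."""
    site: str
    vessel_capacity_kg: float  # usable capacity of this site's batch vessel
    fill_fraction: float       # assumed maximum safe fill level, e.g. 0.85


def scale_batch(material: EnterpriseMaterial, site: SiteProfile) -> dict[str, float]:
    """Scale the site-independent recipe to the largest batch the local vessel allows."""
    batch_kg = site.vessel_capacity_kg * site.fill_fraction
    return {ingredient: round(ratio * batch_kg, 3)
            for ingredient, ratio in material.recipe_kg_per_kg.items()}


product = EnterpriseMaterial("MAT-1001", "Pool cleaner", {"MAT-HCL-11": 0.4, "WATER": 0.6})
print(scale_batch(product, SiteProfile("Site A", 2000.0, 0.85)))  # {'MAT-HCL-11': 680.0, 'WATER': 1020.0}
print(scale_batch(product, SiteProfile("Site B", 5000.0, 0.90)))  # {'MAT-HCL-11': 1800.0, 'WATER': 2700.0}
```

Only the enterprise record needs to be synchronized with ERP; the site profile can change as often as the plant requires without touching it.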

Think of a company that has acquired various manufacturing entities over time. It has consolidated its enterprise systems, but at site level things are different. Different sites may call the same raw material different things (for instance 11% HCl, Hydrochloric acid, Pool Acid, Hydrochloric 11%, etc.). This same raw material may then also have different names in the Batch system, the SCADA, the LIMS, the Stores system, the Scheduling system and the MOM. This makes it extremely difficult, for instance, to report on the consumption of hydrochloric acid from a COO perspective: without an mMDM, the consumption query has to be tailored for each site and system in order to extract the quantities.
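A minimal sketch of what an mMDM cross-reference does for the hydrochloric acid example, assuming a simple lookup table keyed by site, system and local name. The table contents, master IDs (`MAT-HCL-11`, `MAT-NAOH-50`) and function names are hypothetical; a real mMDM would persist and govern this mapping rather than hard-code it. The consumption query is written once against master IDs instead of once per site and system.

```python
from collections import defaultdict

# Hypothetical mMDM cross-reference: (site, system, local name) -> master material ID.
XREF = {
    ("Site A", "LIMS", "11% HCl"): "MAT-HCL-11",
    ("Site A", "Stores", "Hydrochloric acid"): "MAT-HCL-11",
    ("Site B", "Batch", "Pool Acid"): "MAT-HCL-11",
    ("Site B", "SCADA", "Hydrochloric 11%"): "MAT-HCL-11",
    ("Site B", "Stores", "Caustic soda"): "MAT-NAOH-50",
}


def to_master_id(site: str, system: str, local_name: str) -> str:
    """Translate a local material name into the enterprise master ID."""
    try:
        return XREF[(site, system, local_name)]
    except KeyError:
        # Surface unmapped names instead of silently dropping them (error detection).
        raise KeyError(f"No mMDM mapping for {local_name!r} in {site}/{system}") from None


def consumption_by_material(records):
    """Sum consumption records (site, system, local_name, kg) per master material."""
    totals = defaultdict(float)
    for site, system, local_name, kg in records:
        totals[to_master_id(site, system, local_name)] += kg
    return dict(totals)


records = [
    ("Site A", "Stores", "Hydrochloric acid", 120.0),
    ("Site B", "Batch", "Pool Acid", 80.5),
    ("Site B", "Stores", "Caustic soda", 40.0),
]
print(consumption_by_material(records))  # {'MAT-HCL-11': 200.5, 'MAT-NAOH-50': 40.0}
```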

The alternative, of course, is to initiate a naming standardization exercise, which can take years to complete because changes will be required on most level 2 and level 3 systems. That is not even taking into account the redevelopment of visualization and the retraining of operators. And once the naming standardization is complete, who owns the master naming convention, and who ensures that plants don't diverge again over time as new products and materials are added?

The example above is a very simple one, for a raw material, but the same applies to other areas.

Yet when we talk to customers, we see comments and projects from many who are trying to deal with this issue piecemeal, without really looking at the bigger problem and planning for it.

If a company has, for instance, implemented a barcode scanning solution, the item numbers for a specific product or component may differ between suppliers. How will the system know which product or component has been received or issued to the plant without some translation taking place somewhere? mMDM will thus resolve many of the issues that manufacturing companies are experiencing today in their drive for more flexible integration between level 3 and level 4 systems.
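The "translation taking place somewhere" can be sketched as a lookup keyed on both the supplier and the scanned code, assuming the scanner reports the supplier's own item number. The supplier IDs, item numbers and `ScannedReceipt` type are hypothetical. Keying on the pair matters because two suppliers can reuse the same item number for different goods, while different numbers from different suppliers can mean the same internal item.

```python
from dataclasses import dataclass

# Hypothetical mMDM cross-reference: (supplier ID, supplier item number) -> internal item ID.
# The supplier is part of the key because two suppliers may reuse the same item number.
SUPPLIER_XREF = {
    ("SUP-001", "HC-11-25L"): "MAT-HCL-11",
    ("SUP-002", "4471-A"): "MAT-HCL-11",
    ("SUP-002", "9902"): "MAT-NAOH-50",
}


@dataclass(frozen=True)
class ScannedReceipt:
    supplier_id: str
    scanned_code: str
    quantity: float


def resolve_receipt(receipt: ScannedReceipt) -> tuple[str, float]:
    """Translate a scanned supplier code into (internal item ID, quantity)."""
    key = (receipt.supplier_id, receipt.scanned_code.strip())
    if key not in SUPPLIER_XREF:
        # Reject rather than guess: an unmapped code is a master-data gap to fix.
        raise LookupError(f"No mapping for item {key[1]!r} from supplier {key[0]}")
    return SUPPLIER_XREF[key], receipt.quantity


# Two suppliers label the same acid differently; both resolve to one internal item.
print(resolve_receipt(ScannedReceipt("SUP-001", "HC-11-25L", 25.0)))  # ('MAT-HCL-11', 25.0)
print(resolve_receipt(ScannedReceipt("SUP-002", "4471-A", 10.0)))     # ('MAT-HCL-11', 10.0)
```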

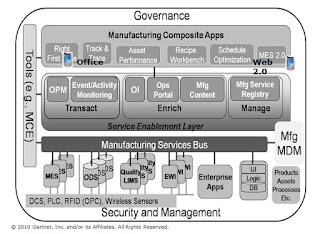

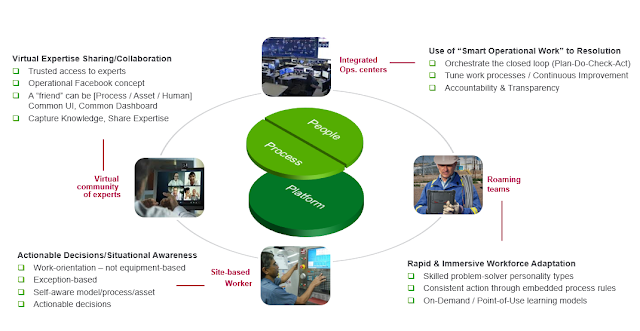

The objective of the proposed split in architecture is to increase application flexibility without reducing the effectiveness and efficiency of the integration between systems. It also abstracts the interface mechanisms out of the applications into services that can operate regardless of application changes. This eliminates numerous point-to-point interfaces and makes systems more flexible in adapting to changing conditions. The manufacturing service-oriented architecture (mSOA) also abstracts business processes and their orchestration from the individual applications into an operations business process management layer.
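One way to picture moving interfaces out of the applications and into services is a publish/subscribe hub, sketched minimally below. This is an assumption for illustration only: the `ServiceBus` class, topic name and adapters are hypothetical, and a real mSOA layer would add persistence, security and orchestration. The helper `point_to_point_links` shows the worst-case arithmetic: with the 20 or so systems mentioned earlier, fully meshed point-to-point integration needs up to 20 × 19 / 2 = 190 bespoke interfaces, whereas each system needs only one connection to a shared service layer.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class ServiceBus:
    """Minimal in-process publish/subscribe hub standing in for an mSOA service layer."""

    def __init__(self) -> None:
        self._subscribers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register an application adapter for a business event."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver message to every subscriber of topic and return the delivery count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


def point_to_point_links(n_systems: int) -> int:
    """Worst case when every pair of systems needs its own bespoke interface."""
    return n_systems * (n_systems - 1) // 2


bus = ServiceBus()
log: list[tuple[str, str]] = []
# Each application connects once to the bus, not once per partner system.
bus.subscribe("ProductionOrderReleased", lambda m: log.append(("MES", m["order"])))
bus.subscribe("ProductionOrderReleased", lambda m: log.append(("LIMS", m["order"])))
bus.publish("ProductionOrderReleased", {"order": "PO-42"})
print(log)                       # [('MES', 'PO-42'), ('LIMS', 'PO-42')]
print(point_to_point_links(20))  # 190
```

Replacing an application then means rewriting one adapter, not every interface that touched it.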

A single person can now interact with multiple applications to track or manage a production order without even realizing that they are jumping between applications.

Even with mSOA and mMDM, integration will not be efficient and effective unless message structures and data exchange follow a standard format. This is where ISA-95 once again plays a big part in ensuring interface effectiveness and consistency. Without standardized data exchange structures and schemas, not even mMDM and mSOA will enable interface re-use.

ISA-95 Part 5 provides standards for information exchange, as well as standardized data structures and XML message schemas based on the Business-to-Manufacturing Markup Language (B2MML) developed by WBF, including the verbs and nouns for data exchange. Standardizing these throughout manufacturing operations ensures that standard services can be developed to accommodate multiple applications. Increasingly, we are seeing process industries such as oil and gas and mining look towards these standards, and develop them further, to address the growing challenge of balancing expansion against sustainability.
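To show what "verbs and nouns" means in practice, here is a heavily simplified sketch that builds a verb/noun message carrying an mMDM master ID, using Python's standard `xml.etree.ElementTree`. It is modeled loosely on the B2MML pattern of a root element named verb plus noun, with the verb and noun inside a data area; the namespace URI and element set are assumptions, header/envelope elements are omitted, and the output has not been validated against the official schemas, so check the B2MML release you actually use.

```python
import xml.etree.ElementTree as ET

# Assumed namespace URI; confirm it against the B2MML release you actually deploy.
B2MML_NS = "http://www.mesa.org/xml/B2MML"
ET.register_namespace("", B2MML_NS)


def qname(tag: str) -> str:
    """Qualify a tag with the namespace in ElementTree's {uri}tag notation."""
    return f"{{{B2MML_NS}}}{tag}"


def build_material_message(verb: str, master_id: str, description: str) -> str:
    """Build a simplified <Verb>MaterialDefinition message carrying an mMDM master ID.

    Envelope/header elements required by the real schema are deliberately omitted.
    """
    root = ET.Element(qname(f"{verb}MaterialDefinition"))
    data_area = ET.SubElement(root, qname("DataArea"))
    ET.SubElement(data_area, qname(verb))  # the verb, e.g. <Sync />
    material = ET.SubElement(data_area, qname("MaterialDefinition"))  # the noun
    ET.SubElement(material, qname("ID")).text = master_id
    ET.SubElement(material, qname("Description")).text = description
    return ET.tostring(root, encoding="unicode")


print(build_material_message("Sync", "MAT-HCL-11", "Hydrochloric acid 11%"))
```

Because every site publishes the master ID rather than its local name, any service consuming these messages can be reused across plants unchanged.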